Emerging technologies such as quantum computing, artificial intelligence (AI), and machine learning (ML) can deliver significant benefits, especially when they are combined. Both AI and ML use large pools of data to predict patterns and draw conclusions, which can be especially helpful for optimizing a quantum computing system. Recently, researchers at the Flatiron Institute’s Center for Computational Quantum Physics (CCQ) applied ML to a particularly difficult quantum physics problem, reducing a system that needed 100,000 equations to one that needs as few as four, without lowering accuracy. The Flatiron Institute is part of the Simons Foundation and works to advance scientific methods. The researchers published their findings in Physical Review Letters.

Looking into the Hubbard Model

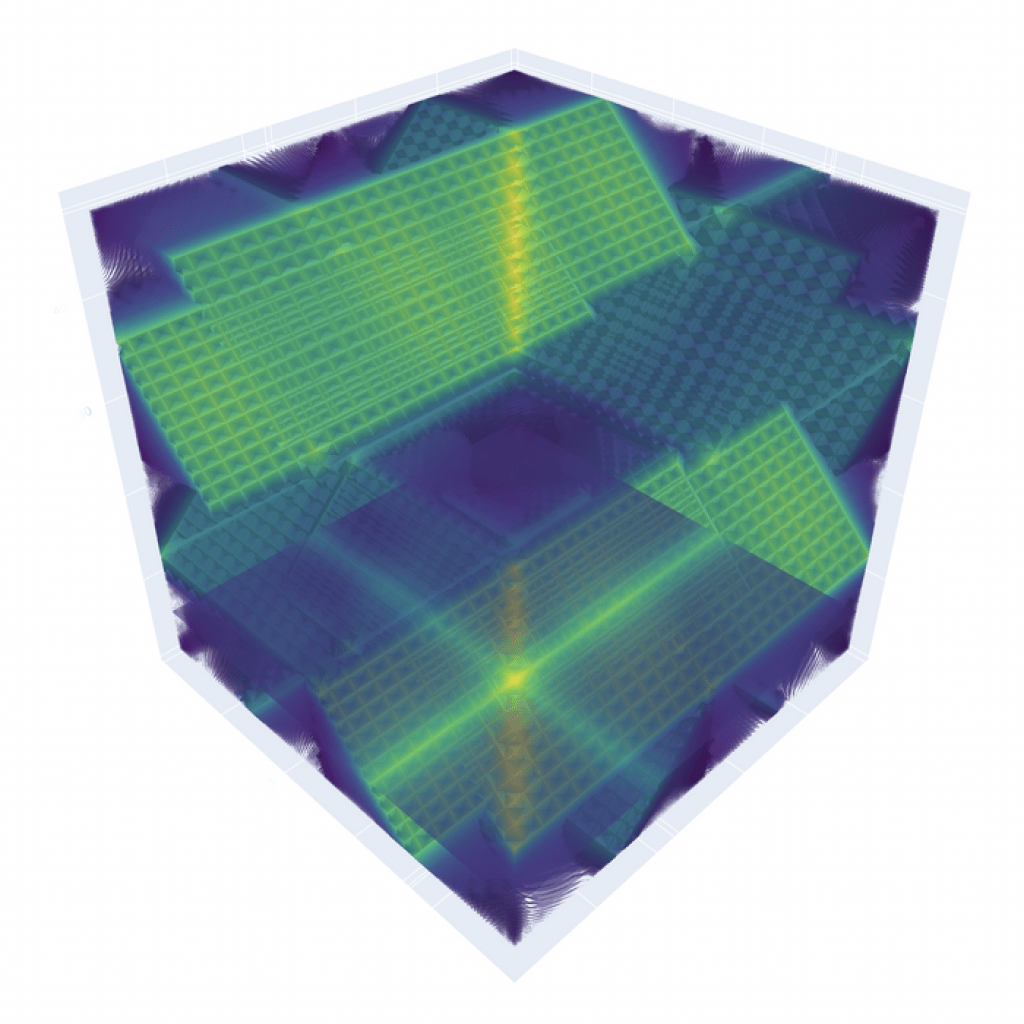

The difficult quantum physics problem in question concerns how electrons interact with each other in a lattice. Lattices are often used in quantum research and are made using a grid of special lasers. Within the lattice, electrons can interact with each other if they’re in the same spot, adding noise to the system and skewing the results. This system, described by what is called the Hubbard model, has been a difficult puzzle for quantum scientists to solve. According to lead researcher Domenico Di Sante, an Affiliate Research Fellow at the CCQ: “The Hubbard model…features just two ingredients: the kinetic energy of electrons (the energy associated with moving electrons on a lattice) and the potential energy (the energy that wants to impede the movement of electrons). It is believed to encode fundamental phenomenologies of complex quantum materials, including magnetism, and superconductivity.”
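For readers who want to see the two ingredients Di Sante describes, the Hubbard model is commonly written in the following textbook form. The symbols are standard notation and do not appear in the article itself: $t$ is the hopping amplitude (the kinetic term), $U$ is the on-site interaction strength (the potential term), $c^{\dagger}_{i\sigma}$ and $c_{j\sigma}$ create and remove an electron with spin $\sigma$ on lattice sites $i$ and $j$, $n_{i\sigma} = c^{\dagger}_{i\sigma} c_{i\sigma}$ counts electrons, and $\langle i,j \rangle$ runs over neighboring sites.

$$
H = -t \sum_{\langle i,j \rangle,\, \sigma} \left( c^{\dagger}_{i\sigma} c_{j\sigma} + \mathrm{h.c.} \right) + U \sum_{i} n_{i\uparrow}\, n_{i\downarrow}
$$

The first sum lets electrons hop between neighboring sites. The second adds an energy cost $U$ whenever two electrons of opposite spin sit on the same site.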

While the Hubbard model may seem simple, it is anything but. The electrons within the lattice can interact in hard-to-predict ways, including becoming entangled. Even if the electrons are in two different places within the lattice, they have to be treated at the same time, forcing scientists to deal with all the electrons at once. “There is no exact solution to the Hubbard model,” added Di Sante. “We must rely on numerical methods.” To tackle this problem, many physicists use a renormalization group, a mathematical method for studying how a system changes when scientists modify different input properties. But for a renormalization group to work, it has to keep track of all possible outcomes of electron interactions, which means solving at least 100,000 equations. Di Sante and his fellow researchers hoped that ML algorithms could make this challenge significantly easier.

The researchers used a specific type of ML tool, called a neural network, to try to solve the quantum physics problem. The neural network searched for a small set of equations that would generate the same solution as the original 100,000-equation renormalization group. “Our deep learning framework attempts to reduce dimensionality from hundreds of thousands or millions of equations to a small handful (down to 32 or even four equations),” Di Sante said. “We used an encoder-decoder design to compress (squeeze) the vertex into this small, ‘latent’ space. In this latent space (imagine this as looking ‘under the hood’ of the neural network), we used a novel ML method called neural ordinary differential equation to learn the solutions of these equations.”
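The compress-then-evolve idea in that quote can be illustrated with a deliberately simplified toy, which is not the researchers' method. In this sketch, a linear projection found by singular value decomposition stands in for the neural encoder-decoder, and a least-squares fit of linear dynamics stands in for the neural ordinary differential equation. The data is synthetic: 1,000 coupled quantities built by hand so that they secretly depend on only four variables. All function names and parameters are hypothetical and chosen for illustration.

```python
import numpy as np


def make_trajectory(n_eq=1000, n_steps=200, dt=0.01, seed=0):
    """Synthetic stand-in for a large coupled system (not real physics data).

    Four hidden variables follow damped oscillations; every one of the
    n_eq observed quantities is a fixed random mixture of those four.
    """
    rng = np.random.default_rng(seed)
    A = np.array([[-0.5, 2.0, 0.0, 0.0],
                  [-2.0, -0.5, 0.0, 0.0],
                  [0.0, 0.0, -1.0, 3.0],
                  [0.0, 0.0, -3.0, -1.0]])
    mixing = rng.normal(size=(4, n_eq))
    z = np.empty((n_steps, 4))
    z[0] = rng.normal(size=4)
    for k in range(n_steps - 1):
        z[k + 1] = z[k] + dt * (z[k] @ A.T)  # explicit Euler step
    return z @ mixing  # shape (n_steps, n_eq)


def fit_linear_autoencoder(X, latent_dim):
    """Return the top right-singular vectors, used for encoding and decoding.

    Assumption: a linear projection replaces the neural encoder-decoder.
    """
    _, _, vt = np.linalg.svd(X, full_matrices=False)
    return vt[:latent_dim]  # shape (latent_dim, n_eq)


def compressed_rollout_error(X, latent_dim, dt):
    """Encode, learn latent dynamics, roll them out, decode, and compare."""
    basis = fit_linear_autoencoder(X, latent_dim)
    y = X @ basis.T  # encode: (n_steps, latent_dim)

    # Fit dy/dt ~= y @ B by least squares on finite differences.
    # Assumption: this linear fit replaces the neural ODE.
    dy = (y[1:] - y[:-1]) / dt
    B, *_ = np.linalg.lstsq(y[:-1], dy, rcond=None)

    # Integrate only the small latent system, starting from the first state.
    y_hat = np.empty_like(y)
    y_hat[0] = y[0]
    for k in range(len(y) - 1):
        y_hat[k + 1] = y_hat[k] + dt * (y_hat[k] @ B)

    X_hat = y_hat @ basis  # decode back to all n_eq quantities
    return np.linalg.norm(X_hat - X) / np.linalg.norm(X)


if __name__ == "__main__":
    X = make_trajectory()
    print("relative error with 4 latent equations:",
          compressed_rollout_error(X, latent_dim=4, dt=0.01))
```

Because the toy data really does have only four underlying degrees of freedom, four latent equations reproduce all 1,000 quantities almost exactly, while using fewer than four loses information. The real vertex is far less cooperative, which is why the researchers relied on nonlinear neural networks rather than a linear projection.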

Solving Other Difficult Quantum Physics Problems

Thanks to the neural network, the researchers found that they could use significantly fewer equations to study the Hubbard model. While this result is a clear success, Di Sante noted that there is still much more work to be done. “Interpreting machine learning architecture is not a simple task,” he stated. “Often, neural networks work very well as black boxes with little understanding of what is learning. Our efforts right now are focused around methods for better understanding the connection between the handful of learned equations and the actual physics of the Hubbard model.”

Still, the initial findings of this research could have significant implications for other quantum physics problems. “Compressing the vertex (the central object that encodes the interaction between two electrons) is a big deal in quantum physics for quantum interacting materials,” explained Di Sante. “It saves memory, and computational power, and offers physical insight. Our work, once again, demonstrated how machine learning and quantum physics intersect constructively.” The approach may also carry over to similar problems within the quantum industry. “The field is facing the same problem: having large, high-dimensional data that needs compression in order to manipulate and study,” Di Sante added. “We hope that this work on the renormalization group can help or inspire new approaches in this subfield as well.”

Kenna Hughes-Castleberry is a staff writer at Inside Quantum Technology and the Science Communicator at JILA (a partnership between the University of Colorado Boulder and NIST). Her writing beats include deep tech, the metaverse, and quantum technology.