IBM’s Quantum Computing Compromise—a Road to Scale?

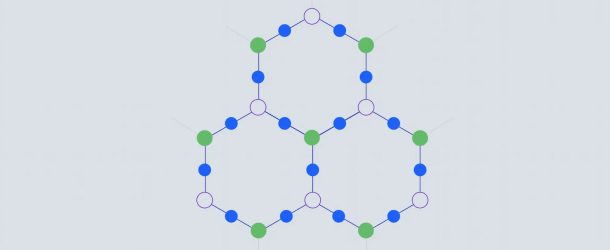

(SpectrumIEEE) As of this week (8 August), all of Big Blue’s quantum processors will use a hexagonal layout that features considerably fewer connections between qubits—the quantum equivalent of bits—than the square layout used in its earlier designs and by competitors Google and Rigetti Computing.

This is the culmination of several years of experimentation with different processor topologies, which describe a device’s physical layout and the connections between its qubits. The company’s machines have seen a steady decline in the number of connections, despite the fact that its own measure of progress, which it dubs “quantum volume,” gives significant weight to high connectivity.

Connectivity comes at a cost, says IBM researcher Paul Nation. Today’s quantum processors are error-prone, and the more connections between qubits, the worse the problem gets. Scaling back that connectivity resulted in an exponential reduction in errors.

“In the short term it’s painful,” says Nation. “But the thinking is not what is best today, it’s what is best for tomorrow.”

IBM first introduced the so-called “heavy-hex” topology last year, and the company has been gradually retiring processors with alternative layouts. After this week, all of the more than 20 processors available on the IBM Cloud will rely on the design. Nation says heavy-hex will also be used in all devices outlined in the company’s quantum roadmap.

The layout represents a significant reduction in connections from the square lattice used in the company’s earlier processors, as well as in most other quantum computers that rely on superconducting qubits. The decision was driven by the kind of qubits that IBM uses, says Nation. The qubits used by companies like Google and Rigetti can be tuned to respond to different microwave frequencies, but IBM’s are fixed at fabrication. This makes them easier to build and reduces control system complexity, he adds.
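To give a rough sense of how much connectivity is given up, the sketch below counts the average number of neighbors per qubit in an idealized square lattice and in an idealized heavy-hex lattice. It is an illustration only, not IBM's actual coupling maps: it assumes wrap-around (periodic) boundaries so that edge effects vanish, it builds the hexagonal lattice in its "brick wall" form, and it assumes heavy-hex means a hexagonal lattice with one extra qubit placed on every edge. All function names are hypothetical.

```python
from collections import defaultdict


def square_lattice(rows, cols):
    """Edges of a square lattice with wrap-around boundaries (rows, cols >= 3)."""
    edges = set()
    for r in range(rows):
        for c in range(cols):
            edges.add(frozenset({(r, c), (r, (c + 1) % cols)}))  # right neighbor
            edges.add(frozenset({(r, c), ((r + 1) % rows, c)}))  # neighbor below
    return edges


def hexagonal_lattice(rows, cols):
    """Edges of a hexagonal lattice drawn as a 'brick wall'.

    Every node links to its right neighbor; only nodes with even (r + c)
    link downward. With wrap-around boundaries and an even number of rows,
    each node ends up with exactly three neighbors.
    """
    edges = set()
    for r in range(rows):
        for c in range(cols):
            edges.add(frozenset({(r, c), (r, (c + 1) % cols)}))
            if (r + c) % 2 == 0:
                edges.add(frozenset({(r, c), ((r + 1) % rows, c)}))
    return edges


def add_edge_qubits(edges):
    """Place one extra node on every edge (the assumed 'heavy' construction)."""
    heavy = set()
    for edge in edges:
        u, v = tuple(edge)
        mid = ("mid", edge)  # unique label for the qubit sitting on this edge
        heavy.add(frozenset({u, mid}))
        heavy.add(frozenset({mid, v}))
    return heavy


def average_degree(edges):
    """Mean number of neighbors per node in an undirected edge set."""
    degree = defaultdict(int)
    for edge in edges:
        for node in edge:
            degree[node] += 1
    return sum(degree.values()) / len(degree)


square = square_lattice(4, 4)
heavy_hex = add_edge_qubits(hexagonal_lattice(4, 4))

print("square lattice:", average_degree(square))
print("heavy-hex lattice:", average_degree(heavy_hex))
```

Under these assumptions every qubit in the square lattice has four neighbors, while the heavy-hex lattice mixes three-neighbor and two-neighbor qubits for an average of 2.4. Real devices have boundaries, so their averages would be somewhat lower in both cases.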